Macros, types and conventions:

long IS_ERR(const

void *ptr) // pointer has value in “error” range (>= (unsigned

long) –MAX_ERRNO)

long IS_ERR_OR_NULL(const

void *ptr)

long PTR_ERR(const

void *ptr)

void* ERR_PTR(long error)

likely(cond)

unlikely(uncond)

BUG_ON(condition)

// can compile away, so do not create side

effects in condition

BUILD_BUG_ON(condition) //

build-time checking

typecheck(__u64,

a) // build-time checking that a is of type __u64

container_of(member_ptr,

container_typename, member_name)

BITS_PER_LONG // 32 or 64

No floating point operations in kernel (there are routines to save/restore fp context).

Interrupt stack (optional. present only on some architectures), normally 1 page (on x86/x64 – 4K).

Kernel stack: on x86/x64

usually 2 pages, i.e. 8 KB.

At the bottom of the stack is struct thread_info.

On stack overflow will silently overwrite it with disastrous consequences.

struct thread_info* current_thread_info();

current_thread_info()->preempt_count

struct task struct* task =

current_thread_info()->task;

struct task struct* task = current;

/* task->state */

TASK_RUNNING 0 // currently running or on runqueue waiting to run

TASK_INTERRUPTIBLE 1 // in interruptible sleep

TASK_UNINTERRUPTIBLE 2 // in non-interruptible sleep

__TASK_STOPPED 4 // stopped due to SIGSTOP, SIGTSTP, SIGTTIN or SIGTTOU

__TASK_TRACED 8 // being traced by debugger etc. via ptrace

/* in task->exit_state */

EXIT_ZOMBIE 16 // process has terminated, lingering around to be reaped by parent

EXIT_DEAD 32 // final state after parent collects status with wait4 or waitpid syscalls

/* in task->state again */

TASK_DEAD 64

TASK_WAKEKILL 128 // wake up on fatal signals

TASK_WAKING 256

#define TASK_KILLABLE (TASK_WAKEKILL | TASK_UNINTERRUPTIBLE)

#define TASK_STOPPED (TASK_WAKEKILL | __TASK_STOPPED)

#define TASK_TRACED (TASK_WAKEKILL | __TASK_TRACED)

#define TASK_NORMAL (TASK_INTERRUPTIBLE | TASK_UNINTERRUPTIBLE)

set_task_state(task, state)

Iterate parent tasks

(note: if parent terminates, task is reparented to member of the group or to init)

for (task = current;

task != &init_task; task = task->parent)

{ ... }

Iterate all tasks in the system (expensive):

for_each_process(task)

{ ... }

task = next_task(task);

task = prev_task(task)

Iterate over children tasks:

struct list_head* list;

list_for_each(list, ¤t->children)

{

task = list_entry(list, struct task_struct, sibling);

}

Annotation for functions that can sleep. This macro will print a stack trace if it is executed in an atomic

context (spinlock, irq-handler, ...):

might_sleep()

Modifiers (annotations):

__init place code into the section discarded after kernel or module init

__read_mostly place data in “read mostly”

section to reduce cache line interference

__write_once same as __read_mostly

__page_aligned_bss align data on page boundary and allocate it from bss-page-aligned section

notrace do not instrument this function for profiling

__irq_entry allocate code in section irqentry-text (only if graph tracer is enabled)

__kprobes allocate code in section kprobes-text (only if CONFIG_KPROBES)

asmlinkage assembler-level call linkage

__lockfunc allocate code in section spinlock-text

__kernel designate as kernel-space address

__user, __iomem, __rcu disable dereference (only used by __CHECKER__, see sparse(1))

__nocast sparse will issue warning if

attempted type-conversion to this var (e.g.

signed int value to unsigned var)

__bitwise sparse will issue

warning if __bitwise variables are mixed with other integers

__safe sparse: not used in the codebase

__force sparse: disable warning (e.g.

when casting int to bitwise)

__le32,

__be32 sparse: little

endian, big endian

__must_hold(x) sparse: the specified lock is held on function entry and exit

__acquires(x) sparse: function acquires the specified lock

__releases(x) sparse: function releases the specified lock

__acquire(x)

__release(x)

__cond_lock(x,c)

Sparse:

git://git.kernel.org/pub/scm/devel/sparse/sparse.git

make

make install

then run kernel make:

make

C=1

# run sparse only on files that need to be recompiled

make

C=2

# run sparse on all files, whether recompiled or not

make C=2 CF="-D__CHECK_ENDIAN__" # pass

optional parameters to sparse

Contexts:

·

process

(e.g. syscall), preemptible context

·

process,

non-preemptible context

· bottom-half (DPC/fork-like, but may be threaded, aka “soft interrupt” – e.g. tasklet)

· primary interrupt handler (irq, aka "top half", aka “hard interrupt”)

· PREEMPT_RT only: secondary (threaded) interrupt handler, per-IRQ thread

There are no IPL/IRQL

levels.

SOFTIRQ is executed with hardware interrupts disabled (but tasklet handler enables interrupts

before entering the tasklet routine).

Bottom-half processing can be triggered by:

· return from hardware interrupt (irq_exit)

· local_bh_enable(), including indirect calls

· ksoftirqd kernel thread (per-CPU, runs at low priority)

·

any code that explicitly calls do_softirq(),

__do_softirq(), call_softirq() or invoke_softirq(),

such as network code

Note again that Linux is NOT "true"

IPL/IRQL-based system, and local_irq_enable() or unlocking a spinlock DO NOT

cause SOFTIRQ processing to happen.

Task switch can be triggered by:

· REI to user-space

· REI to kernel-space if (current->preempt_count == 0 && need_resched())

·

unlocking a lock,

including indirect unlock or any other call to preempt_enable(),

if result is (current->preempt_count == 0 && need_resched())

· explicit voluntary call to schedule(), including yield() and blocking functions

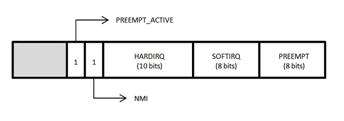

Preemption mask:

preempt_count()

≡

current_thread_info()->preempt_count

preempt_count() Returns

preemption disable count, this includes count masks added

by

currently active hardirqs, softirqs and NMI. Low byte is preempt

disable

count (if kernel is configured preemptible, otherwise 0),

byte

1 is softirq count, next 10 bits is hardirq count,

then

1 bit for NMI, then one bit for PREEMPT_ACTIVE.

There is also preempt_lazy_count (useful for PREEMPT_RT)

SOFTIRQ is changed by 1

on entering/leaving softirq processing and is changed by 2 on local_bh_enable/local_bh_disable,

to distinguish between softirq-active and softirq-disabled states

PREEMPT_ACTIVE

rescheduling is being performed (so do not reenter the scheduler)

Context control functions:

preempt_disable() increments PREEMPT field

preempt_enable() decrement PREEMT, if goes to 0, will check

for need_reschdule()

local_bh_disable() increments SOFTIRQ field by 2 (whereas entering

softirq increments it

only by 1, to distinguish between

softirq-active/softirq-disabled states)

local_bh_enable() If goes to 0, will check for pending bottom halves (softirqs)

local_irq_disable() disable hw interrupts unconditionally (on

some arch can be lazy)

local_irq_enable() enable hw interrupts unconditionally

unsigned long flags;

local_irq_save(flags) save previous state

into flags and disable hw interrupts

local_irq_restore(flags)

restore hw-interrupt-enable state from flags

Other functions:

hard_irq_disable() Really disable interrupts, rather than

relying on lazy-disabling on

some architectures.

preempt_enable_no_resched() Decrement preemption count. If goes to

0, do not check for any

pending reschedules.

in_nmi() Non-zero if executing NMI handler.

in_irq() Non-zero if currently executing primary (hardirq) hardware

interrupt handler, 0 if in process context or bottom half or NMI.

in_softirq() Non-zero if processing softirq or have BH disabled.

in_servng_softirq() Non-zero if processing softirq.

in_interrupt() Non-zero if in NMI or primary interrupt

(hardirq) or in bottom-half context, or if have BH disabled.

irqs_disabled() Non-zero

if local interrupt delivery is disabled.

in_atomic() Checks (preempt_count() & ~PREEMPT_ACTIVE), cannot sense if

spinlocks are held in

non-preemptable kernel, so use only with

great caution. Do not use in drivers.

synchronize_hardirq(irq) Wait for pending hard IRQ handlers

to complete on other CPUs

synchronize_irq(irq) Wait for pending hard & threaded IRQ

handlers to complete on other CPUs

Warning: on non-preemptible systems

(CONFIG_PREEMPT=n) spin_lock does not

change preemption mask.

Bottom halves:

top half = primary interrupt context

bottom half = deferred interrupt processing (similar to DPC or fork), can be threaded

Methods:

|

original BH |

removed in 2.5 |

|

Task queues |

removed in 2.5 |

|

Softirq |

since 2.3 |

|

Tasklet |

since 2.3 |

|

Work queues |

since 2.3 |

|

Timers |

|

Original BH (removed in 2.5):

32-bit request mask and 32 lists of requests, each level globally synchronized across all CPUs.

Task queues (removed in 2.5):

Set of queues.

Softirqs (BH):

Set of NR_SOFTIRQS statically defined bottom halves allocated at kernel compile time.

Cannot register extra softirq levels dynamically.

Handler can run on any CPU.

Rarely used directly (tasklets are more common), but tasklets are layered on Softirq.

Defined levels:

|

HI_SOFTIRQ |

0 |

High-priority tasklets |

|

TIMER_SOFTIRQ |

1 |

Timers |

|

NET_TX_SOFTIRQ |

2 |

Send network packets |

|

NET_RX_SOFTIRQ |

3 |

Receive network packets |

|

BLOCK_SOFTIRQ |

4 |

Block devices: done |

|

BLOCK_IOPOLL_SOFTIRQ |

5 |

Block devices: poll |

|

TASKLET_SOFTIRQ |

6 |

Normal priority tasklets |

|

SCHED_SOFTIRQ |

7 |

Scheduler (just inter-CPU balancing, not the same as SOFTINT IPL$_RESCHED) |

|

HRTIMER_SOFTIRQ |

8 |

High-resolution timer |

|

RCU_SOFTIRQ |

9 |

RCU callbacks |

Level to name:

extern char *softirq_to_name[NR_SOFTIRQS];

Register handler:

void my_action(struct softirq_action *);

open_softirq(HRTIMER_SOFTIRQ, my_action);

Handler runs with interrupts enabled and cannot sleep.

Raise softirq:

Marks softirq level as pending.

Temporarily disables interrupts internally.

raise_softirq(NET_TX_SOFTIRQ);

Equivalent to:

unsigned long flags;

local_irq_save(flags);

raise_softirq_irqoff(nr);

local_irq_restore(flags);

If interrupts are already disabled, can do:

raise_softirq_irqoff(NET_TX_SOFTIRQ);

Try to send a softirq to a remote cpu.

If this cannot be done, the work will be queued to the local cpu.

void send_remote_softirq(struct call_single_data* cp, int cpu, int softirq);

Softirq processing logic:

do_softirqd is invoked:

· on return from hardware interrupt (irq_exit)

· local_bh_enable()

· in the ksoftirqd kernel thread (per-CPU, runs at low priority)

·

in any code that explicitly calls do_softirq(),

__do_softirq(), call_softirq() or invoke_softirq(),

such as network code

void do_softirq(void)

{

__u32 pending;

unsigned long flags;

struct softirq_action* h;

if (in_interrupt())

return;

local_irq_save(flags);

pending = local_softirq_pending();

if (pending)

{

... on x86 and some other architectures ...

... switch to per-CPU softirq stack ...

set_softirq_pending(0);

local_irq_enable();

h = softirq_vec;

do

{

if (pending & 1)

h->action(h);

h++;

pending >>= 1;

} while (pending);

}

... recheck if still pending, and if so retry the loop few times ...

... after which wakeup softirqd for execution on a worker thread ...

local_irq_restore(flags);

}

Thus:

· Multiple softirqs (of same or different levels) can concurrently execute on different processors.

·

Single pass on the

same CPU goes from high-priority (0) to low-priority (9) softirqs, but in a

larger scheme of things low-priority softirq can get executed earlier than

high-priority softirq.

(E.g. if another processor picks up lower-priority softirq first, or if

high-priority softirq is added while scan pass is already in progress, or if

tasklets are relegated to the threaded execution, of due to ksoftirqd.)

·

softirq’s are

prioritized for ordering only (and even then are subject to postponing for ksoftirqd thread).

high-priority softirq does not interrupt lower-priority softirq.

"While a (softirq) handler runs, softirqs on the

current processor are disabled.

A softirq never preempts another softirq (on the same CPU).

The only event that can preempt a softirq is an interrupt handler."

Tasklets (BH):

Tasklets

· built on top of softirqs

· can be scheduled only as one instance at a time (and also only on one processor at a time)

· if handler is already running on CPU1 and tasklet gets scheduled again on CPU2, second instance of handler can run (on CPU2) concurrently with the first one (running on CPU1)

· handler runs with all interrupts enabled

· does not have process context (no files etc.)

· cannot sleep

· per-processor queues tasklet_vec (for regular tasklets) and tasklet_hi_vec (for high-priority tasklets)

Rules:

· if tasklet_schedule is called, then tasklet is guaranteed to be executed on some CPU at least once after this

· if tasklet is already scheduled, but its execution is still not started, it will be executed only once

· if tasklet is already running on another CPU (or schedule is called from tasklet itself), it is scheduled again

·

tasklet is strictly serialized with

respect to itself, but not to another tasklets;

for inter-tasklet synchronization, use

spinlocks.

struct tasklet_struct

{

struct tasklet_struct *next;

unsigned long state; // bits: TASKLET_STATE_SCHED (0), TASKLET_STATE_RUN (1)

atomic_t

count; // if non-zero, disabled and cannot run

// otherwise enabled and can run if scheduled

void (*func)(unsigned long);

unsigned long data;

}

#define DECLARE_TASKLET(name, func, data) \

struct tasklet_struct name = { NULL, 0, ATOMIC_INIT(0), func, data }

#define DECLARE_TASKLET_DISABLED(name, func, data) \

struct tasklet_struct name = { NULL, 0, ATOMIC_INIT(1), func, data }

void tasklet_init(struct tasklet_struct *t, void (*func)(unsigned long), unsigned long data)

int tasklet_trylock(struct tasklet_struct *t) // sets RUN bit on MP, return true if was not set

void tasklet_unlock(struct tasklet_struct *t) // clear RUN bit

void tasklet_unlock_wait(struct tasklet_struct *t) // spin while RUN bit is set

No-op on uniprocessor.

void tasklet_schedule(struct tasklet_struct *t)

void tasklet_hi_schedule(struct tasklet_struct *t)

If SCHED is already set, do nothing.

Otherwise insert into queue and signal softirq.

void tasklet_kill(struct tasklet_struct *t);

Cannot be called from interrupt.

Acquire SCHED bit (i.e. wait till owner clears it, e.g after removing from

queue), spin while RUN bit is set, clear SCHED bit.

|

tasklet state at call to tasklet_kill |

outcome |

|

not queued |

returns fine |

|

queued, disabled |

will lock up |

|

enabled, queued |

removed from queue |

|

enabled, running |

void tasklet_kill_immediate(struct tasklet_struct *t, unsigned int cpu);

Can be called only for CPU in state CPU_DEAD.

Remove tasklet even if it is in SCHED state.

void tasklet_disable_nosync(struct tasklet_struct *t)

Disables tasklet by atomic_inc(t->count) and returns immediately.

void tasklet_disable(struct tasklet_struct *t)

Disables tasklet by atomic_inc(t->count) and calls tasklet_unlock_wait().

void tasklet_enable(struct

tasklet_struct *t)

void tasklet_hi_enable(struct tasklet_struct *t)

Enables tasklet by atomic_dec(t->count).

Tasklet block:

func(data)

disable_count

flags: sched (“scheduled”), run (“currently running”)

Handler logic:

|

tasklet_schedule |

softirq handler |

|

{ sched = 1 queue + softirq } |

clear per-cpu queue

for (each entry) {

if (run) requeue: put back on the queue request softirq } else { run = 1

if (disable_count != 0) run = 0 goto requeue } else { sched = 0 func(data) run = 0 } } } |

Work queues (BH):

· items executed by a worker thread, in process context and can sleep

· default threads are per-CPU event/n, but queue can also have dedicated processing thread or threads

wq = create_workqueue("my_queue");

wq = create_singlethread_workqueue("my_queue");

wq = create_freezable_workqueue("my_queue");

NULL if fails.

create_workqueue creates queue and worker threads, one per CPU, named my_queue/n.

pre-existing queues:

|

system_wq |

Used by schedule[_delayed]_work[_on](). For short items. |

|

system_long_wq |

Similar to system_wq, but for

long items. |

|

system_freezable_wq |

Similar to system_wq, but freezable. |

|

system_unbound_wq |

Workers are not bound to any specific CPU, not concurrency managed, and all queued works are executed immediately as long as max_active limit is not reached and resources are available. |

int keventd_up(void)

Check if system_wq exists (not NULL).

void destroy_workqueue( struct workqueue_struct* wq);

void workqueue_set_max_active(wq, int max_active)

Adjust maximum number of concurrently active works.

Do not call from interrupt context.

struct workqueue_struct* wq;

work_struct work;

void my_func(struct work_struct *pWork);

DECLARE_WORK(work, myfunc); // compile-time initialization

INIT_WORK(&work, myfunc) // run-time initialization

INIT_WORK_ONSTACK(&work, myfunc) // ... same for on-stack work object

PREPARE_WORK(&work, new_myfunc) // change the routine in an already initialized descriptor

bool queue_work(wq, pWork) // queue on current CPU

bool schedule_work(pWork) // same as queue_work on system_wq

Returns TRUE if was queued, FALSE if was already on the queue.

If queue is being drained with drain_queue, will not actually insert in the queue unless called from the context of a "chained work", i.e. work executing on this queue, but will not reflect it in return status.

To pass parameters to work routine, embed struct_work in larger structure.

bool queue_work_on(int cpu, wq, pWork) // queue for processing on specified CPU

bool schedule_work_on(int cpu, pWork) // same as queue_work_on on system_wq

Returns TRUE if was queued, FALSE if was already on the

queue.

Caller must ensure the CPU cannot go away.

If queue is being drained with drain_queue, (see above).

struct delayed_work dwork

struct delayed_work *pDelayedWork

void my_delayed_work_timer_func(unsigned long data);

DECLARE_DELAYED_WORK(dwork, my_delayed_work_timer_func) // compile-time initialization

INIT_DELAYED_WORK(&dwork, my_delayed_work_timer_func) // run-time initialization

INIT_DELAYED_WORK_ONSTACK(&dwork, my_delayed_work_timer_func) // ... same for on-stack dwork object

PREPARE_ DELAYED _WORK(&dwork, new_my_delayed_func) // change the routine in an already initialized struct

/* Deferrable is similar to delayed, but may postpone waking up CPU if sleeping */

DECLARE_DEFERRABLE_WORK(dwork, my_delayed_work_timer_func) // compile-time initialization

INIT_DEFERRABLE_WORK(&dwork, my_delayed_work_timer_func) // run-time initialization

INIT_DEFERRABLE_WORK_ONSTACK(&dwork, my_delayed_work_timer_func) // ... same for on-stack dwork object

PREPARE_ DELAYED _WORK(&dwork, new_my_delayed_func) // change the routine in an already initialized struct

bool queue_delayed_work(wq, struct delayed_work *work, unsigned long delay)

bool schedule_delayed_work(struct delayed_work *work, unsigned long delay)

bool queue_delayed_work_on(int cpu, wq, struct delayed_work *work, unsigned long delay)

bool schedule_delayed_work_on(int cpu, struct delayed_work *work, unsigned long delay)

Similar to queue_work/schedule_work, but additional parameter delay (number of system ticks) specifies minimum time to delay before executing the item. If queue is being drained with drain_queue, (see above).

bool mod_delayed_work(wq, pDelayedWork, unsigned long delay)

bool mod_delayed_work_on(int cpu, wq, pDelayedWork, unsigned long delay)

Modify delay of or queue a delayed work on specific CPU or any CPU.

If pDelayedWork is idle, equivalent to queue_delayed_work_on(); otherwise, modify pDelayedWork 's timer so that it expires after delay. If delay is zero, pDelayedWork is guaranteed to be scheduled immediately regardless of its current state.

Returns FALSE if pDelayedWork was idle and queued, TRUE if pDelayedWork was pending and its timer was modified.

Safe to call from any context including interrupt handler.

bool cancel_work_sync(pWork)

Cancel pWork and wait for its execution to finish.

Can be used even if the work re-queues itself or migrates to another workqueue.

On return, pWork is guaranteed to be not pending or executing on any CPU.

Returns TRUE if pWork was pending, FALSE otherwise.

Caller must ensure that the workqueue on which pWork was last queued can't be destroyed before this function returns.

bool cancel_delayed_work_sync(pDelayedWork)

Cancel a delayed work and wait for it to finish.

Similar to cancel_work_sync(), but for delayed works.

bool cancel_delayed_work(pDelayedWork)

Cancel a pending delayed work.

Returns TRUE if pDelayedWork was pending and canceled, FALSE if wasn't pending.

Work callback function may still be running on return, unless function returns TRUE and the work doesn't re-arm itself. Explicitly flush or use cancel_delayed_work_sync() to wait on it.

Safe to call from any context including IRQ handler.

bool flush_work(pWork)

Wait for a work to finish executing the last queueing instance.

Wait until pWork has finished execution.

pWork is guaranteed to be idle on return if it hasn't been requeued since flush started.

Returns TRUE if flush_work() waited for the work to finish execution, FALSE if it was already idle.

bool flush_delayed_work(pDelayedWork)

Wait for a delayed work to finish executing the last queueing.

Delayed timer is cancelled and the pending work is queued for immediate execution.

Like flush_work(), this function only considers the last queueing instance of pDelayedWork.

Returns TRUE if waited for the work to finish execution, FALSE if it was already idle.

void flush_workqueue(wq)

void flush_scheduled_work(void) // same as flush_workqueue(system_wq)

Ensure that any scheduled work has run to completion.

Forces execution of the workqueue and blocks until its completion.

Sleep until all works which were queued on entry have been handled, but not livelocked by new incoming ones.

This is typically used in driver shutdown handlers.

void drain_workqueue(wq)

Wait until the workqueue becomes empty.

While draining is in progress, only chain queueing is allowed.

In other words, only currently pending or running work items on wq can queue further work items on it.

wq is flushed repeatedly until it becomes empty. The number of flushing is determined by the depth of chaining and should be relatively short.

int schedule_on_each_cpu(work_func_t func)

Execute a function synchronously on each online CPU

Executes func on each online CPU using the system workqueue and blocks until all CPUs have completed.

Very slow.

Returns 0 on success, -errno on failure.

int execute_in_process_context(work_func_t fn, struct execute_work* pExecuteWork)

Execute the routine within user context.

Executes the function immediately if process context is available, otherwise schedules the function for delayed execution.

Returns: 0 if function was executed immediately (i.e. was not in interrupt).

Returns: 1 if was in interrupt and attempted to schedule function for execution.

bool workqueue_congested(unsigned int cpu, wq)

Test whether wq's cpu workqueue for cpu is congested.

There is no synchronization around this function and

the test result is unreliable and only useful as advisory hints or for

debugging.

unsigned int work_cpu(pWork)

Return the last known associated cpu for pWork: CPU number if pWork was ever queued, WORK_CPU_NONE otherwise.

unsigned int work_busy(pWork)

Test whether a work is currently pending or running

There is no synchronization around this function and the test result is unreliable and only useful as advisory hints or for debugging. Especially for reentrant wqs, the pending state might hide the running state.

Returns or'd bitmask of WORK_BUSY_* bits.

Timers (BH):

Called via TIMER_SOFTIRQ .

Timers are not repetitive (once expires, need to be restarted).

void myfunc(unsigned long mydata) { ... }

[static] DEFINE_TIMER(tmr, myfunc, 0, mydata);

struct timer_list tmr;

init_timer(& tmr);

init_timer_onstack(&

tmr); // if variable is on

stack, also call destroy_timer_on_stack(& tmr)

init_timer_deferable(& tmr); // will

not casue CPU to wake up from power sleep

tmr.function = myfunc;

tmr.data = mydata;

tmr.expires = jiffies + HZ / 2;

setup_timer(&tmr, myfunc, mydata)

setup_timer_on_stack(&tmr, myfunc, mydata)

setup_deferable_timer_on_stack(&tmr, myfunc, mydata)

void add_timer(& tmr)

// must not be already pending

void add_timer_on(&tmr, int cpu)

int mod_timer(&tmr,

unsigned long expires)

Equivalent to del_timer(tmr);

tmr.expires = expires; add_timer(tmr);

The only safe way to queue timer when there are multiple unsynchronized

concurrent users of the same timer.

If timer was inactive, returns 0, if was active, returns 1.

int mod_timer_pending(&tmr, unsigned long expires)

Modify an already pending timer.

For active timers the same as mod_timer().

But will not reactivate or modify an inactive timer.

int mod_timer_pinned(&tmr, unsigned long expires)

Update the expire field of an active timer (if the timer is inactive it will be activated) and ensure that the timer is scheduled on the current CPU.

This does not prevent the timer from being migrated when the current CPU goes offline. If this is a problem for

you, use CPU-hotplug notifiers to handle it correctly, for example, cancelling the timer when the corresponding CPU goes

offline.

Equivalent to: del_timer(timer); timer->expires = expires; add_timer(timer);

int timer_pending(&tmr) { return tmr.entry.next != NULL; } // no inherent synchronization

int del_timer(&tmr)

Deactivate timer. OK to call either for active and

inactive timers.

If was inactive, return 0, otherwise (if was active) return 1.

Guarantees that the timer won't be executed in the future, but does not wait

for completion of an already running handler.

int del_timer_sync(&tmr)

Deactivate a timer and wait for the handler to finish.

Synchronization rules: Callers must prevent restarting of the timer, otherwise this function is meaningless.

It must not be called from interrupt contexts unless the timer is an irqsafe one.

The caller must not hold locks which would prevent completion of the timer's handler.

The timer's handler must not call add_timer_on().

For non-irqsafe timers, you must not hold locks that are held in interrupt

context while calling this function. Even if the lock has nothing to do with

the timer in question. Here's why:

CPU0 CPU1

---- ----

<SOFTIRQ>

call_timer_fn();

base->running_timer = mytimer;

spin_lock_irq(somelock);

<IRQ>

spin_lock(somelock);

del_timer_sync(mytimer);

while (base->running_timer == mytimer);

Now del_timer_sync() will never return and never release somelock. The interrupt on the other CPU is waiting to grab somelock but it has interrupted the softirq that CPU0 is waiting to finish.

int try_to_del_timer_sync(&tmr)

Try to deactivate a timer. Upon successful (ret >= 0) exit the timer is not queued and the handler is not running on any CPU.

Hi-res timers:

#include <linux/ktime.h>

#include <linux/hrtimer.h>

/* nanoseconds */

typedef union ktime {

s64 tv64; } ktime_t;

KTIME_SEC_MAX

ktime_t ktime_set(s64 sec, unsigned long ns)

ktime_t timespec_to_ktime(struct timespec ts)

ktime_t timespec64_to_ktime(struct timespec64

ts)

ktime_t timeval_to_ktime(struct timeval tv)

s64 ktime_to_ns(kt)

s64 ktime_to_us(kt)

s64 ktime_to_ms(kt)

struct timespec ktime_to_timespec(kt)

struct timespec ktime_to_timespec64(kt)

struct timeval ktime_to_timeval(kt)

ktime_t ktime_add(kt1, kt2)

ktime_t ktime_sub(kt1, kt2)

ktime_t ktime_add_ns(kt, unsigned long ns)

ktime_t ktime_sub_ns(kt, unsigned long ns)

ktime_t ktime_add_safe(ktime_t kt1, ktime_t kt2)

ktime_t ktime_add_ms(kt, u64 ms)

ktime_t ktime_sub_us(kt, u64 us)

int ktime_compare(kt1, kt2)

bool ktime_equal(kt1, kt2)

bool ktime_after(kt1, kt2)

bool ktime_before(kt1, kt2)

hrtimer_init(timer, CLOCK_MONOTONIC,

HRTIMER_MODE_REL);

timer->function = ftimer;

hrtimer_start(timer, ktime_set(0, ns),

HRTIMER_MODE_REL);

...

hrtimer_cancel(timer); // waits for the handler to finish

static enum hrtimer_restart ftimer(struct

hrtimer *timer)

{

missed

= hrtimer_forward_now(timer, ktime_set(0, ns);

....

return

HRTIMER_NORESTART; // or HRTIMER_RESTART

}

struct hrtimer *timer;

clockid_t which_clock =

CLOCK_MONOTONIC or CLOCK_REALTIME;

enum hrtimer_mode mode =

HRTIMER_MODE_ABS //

absolute

HRTIMER_MODE_REL //

relative to now

HRTIMER_MODE_ABS_PINNED //

absolute + bound to this CPU

HRTIMER_MODE_REL_PINNED // relative + bound to this CPU

void hrtimer_init(timer, which_clock, mode)

void hrtimer_init_on_stack(timer, which_clock,

mode)

void destroy_hrtimer_on_stack(timer)

int hrtimer_start(timer, kt, mode)

int hrtimer_start_range_ns(timer, kt

unsigned long range_ns, mode)

u64 hrtimer_forward(timer, ktime_t now,

ktime_t interval)

u64 hrtimer_forward_now(timer, ktime_t

interval)

ktime_t now =

timer->base->get_time()

ktime_t now =

hrtimer_cb_get_time(timer)

void hrtimer_set_expires(timer, kt)

void hrtimer_set_expires_range(timer, kt,

ktime_t delta) //

delta is extra tolerance

void hrtimer_set_expires_range_ns(timer, kt,

unsigned long delta_ns)

void hrtimer_add_expires(timer, kt)

void hrtimer_add_expires_ns(timer, u64 ns)

ktime_t hrtimer_get_softexpires(timer) //

without added tolerance (i.e. soft)

ktime_t hrtimer_get_expires(timer) //

with tolerance, i.e. soft + delta

ktime_t hrtimer_expires_remaining(timer)

void hrtimer_get_res(which_clock, struct

timespec *tp)

Wait queues:

Replacement for sleep/wake.

Exclusive waiters (those with WQ_FLAG_EXCLUSIVE) count against wakeup limit counter, non-exclusive do not.

wait_queue_head_t wq;

init_waitqueue_head(& wq);

Sample wait cycle:

[static] DEFINE_WAIT(mywait); // create wait queue entry

add_wait_queue(&wq, &mywait); // add it to wait queue

while (! (condition))

{

/* sets current task state */

/* TASK_INTERRUPTIBLE: signal wakes process up */

/* TASK_UNINTERRUPTIBLE: does not */

prepare_to_wait(&wq, &mywait, TASK_INTERRUPTIBLE);

if (signal_pending(current))

... handle signal ...

schedule();

}

finish_wait(&wq, &mywait); // sets task state to TASK_RUNNING and removed mywait off wq

Note: instead of default_wake_function can supply own wake function in mywait.func(mywait.private).

Helper macros:

void wait_event(&wq, condition)

Uninterruptible wait.

void wait_event_lock_irq(&wq, condition, spinlock_t lock)

void wait_event_lock_irq_cmd(&wq, condition, spinlock_t lock, cmd)

Uninterruptible wait.

Condition checked under the lock.

Expected to be called with the lock held, returns with the lock held.

Command cmd is executed right before going to sleep.

int wait_event_interruptible(&wq, condition)

int wait_event_interruptible_lock_irq(&wq, condition, spinlock_t lock)

int

wait_event_interruptible_lock_irq_cmd(&wq,

condition, spinlock_t lock, cmd)

Interruptible wait.

Returns 0 if condition evaluated to true, or -ERESTARTSYS if was interrupted.

Condition checked under the lock.

Expected to be called with the lock held, returns with the lock held.

Command cmd is executed right before going to sleep.

long wait_event_timeout(&wq, condition, timeout) // timeout is in jiffies

Uninterruptible wait with timeout.

Returns 0 if timeout elapsed, otherwise remaining time.

long wait_event_interruptible _timeout(&wq, condition, timeout) // timeout is in jiffies

Interruptible wait with

timeout.

Returns -ERESTARTSYS if sleep was interrupted, 0 if timeout elapsed, otherwise

remaining time.

int wait_event_interruptible_exclusive(&wq, condition)

Interruptible exclusive

wait.

Returns 0 if condition evaluated to true, or -ERESTARTSYS if was interrupted.

void __add_wait_queue_exclusive(&wq, &mywait)

void __add_wait_queue_tail_exclusive(&wq,

&mywait)

void add_wait_queue_exclusive(&wq,

&mywait)

void prepare_to_wait_exclusive(&wq, &mywait, TASK_[UN]INTERRUPTIBLE)

General routines/macros to set up for exclusive wait.

int wait_event_interruptible_exclusive_locked(&wq, condition)

int wait_event_interruptible_exclusive_locked_irq(&wq, condition)

Interruptible wait.

Must be called with wq.lock

held.

Spinlock is unlocked while sleeping but condition testing is done while the

lock

is held and when this macro exits the lock is held.

wait_event_interruptible_exclusive_locked

uses spin_lock()/spin_unlock().

wait_event_interruptible_exclusive_locked_irq

uses spin_lock_irq()/spin_unlock_irq().

Returns -ERESTARTSYS if sleep was interrupted, 0 if condition was satisfied.

int wait_event_killable(&wq, &mywait)

Semi-interruptible (only

by fatal signals) wait with timeout.

Returns 0 if condition evaluated to true, or -ERESTARTSYS if was interrupted.

int

wait_on_bit(void *word, int bit, int (*action)(void *),

unsigned sleepmode)

int wait_on_bit_lock(void *word, int bit, int (*action)(void

*), unsigned sleepmode)

wait_on_bit : wait for a bit to be cleared.

wait_on_bit_lock : wait for a bit to be cleared when wanting to set it.

action is a function used to sleep.

Wake up:

wake_up_all(&wq); // wakes up all waiters

wake_up(&wq);

// wakes up upto one waiter, same as wake_up_nr(&wq, 1)

wake_up_nr(&wq,

5);

// wakes up upto specified number of waiters

Only exclusive waiters (with WQ_FLAG_EXCLUSIVE) count against the counter.

/* same but with queue spinlock held */

unsigned long flags;

spin_lock_irqsave(&q->lock, flags);

wake_up_locked(&wq);

// wakes up upto one waiter

wake_up_all locked(&wq);

// wakes up upto one waiter

spin_unlock_irqrestore(&q->lock, flags);

void wake_up_bit(void* word, int bit)

Wake up waiter on a bit.

Remove from wait queue:

void remove_wait_queue(&wq, &mywait)

Completion variables:

Wait queue plus "done" flag.

struct completion {

unsigned int done;

wait_queue_head_t wait;

};

struct competion comp;

DECLARE_COMPLETION(comp)

DECLARE_COMPLETION_ONSTACK(comp)

#define INIT_COMPLETION(x) ((x).done

=

0)

// reinitialize for reuse

void init_completion(& comp)

void wait_for_completion(&comp)

int wait_for_completion_interruptible(&comp)

int wait_for_completion_killable(&comp)

unsigned long wait_for_completion_timeout(&comp, unsigned long timeout)

long wait_for_completion_interruptible_timeout(&comp, unsigned long timeout)

long wait_for_completion_killable_timeout(&comp, unsigned long timeout)

bool try_wait_for_completion(&comp)

bool completion_done(&comp)

void complete(&comp)

void complete_all(&comp)

Atomic operations on 32-bit counters:

#define ACCESS_ONCE(x) (*(volatile typeof(x) *)&(x)) // note: does not imply memory barrier

typedef struct { volatile int counter; } atomic_t;

atomic_t v = ATOMIC_INIT(77);

int i;

int atomic_read(pv, i) // no implied barriers !

void atomic_set(pv, i) // ...

void atomic_add(i,

pv)

// ...

void atomic_sub(i,

pv)

// ...

void atomic_inc(pv)

// ...

void atomic_dec(pv)

// ...

No implied barriers (compiler or memory) !!

For some operations, use helper functions

void smp_mb__before_atomic_dec()

void smp_mb__after_atomic_dec()

void smp_mb__before_atomic_inc()

void smp_mb__after_atomic_inc()

For others use smp_wmb, smp_rmb, smp_mb or barrier.

int atomic_sub_and_test(i,

pv)

// true if result is zero, performs memory barriers before and after

int atomic_dec_and_test(pv)

// ...

int atomic_inc_and_test(pv)

// ...

int atomic_add_return(i,

pv)

// return result, performs memory barriers before and after

int atomic_sub_return(i,

pv)

// ...

int atomic_inc_return(pv)

// ...

int atomic_dec_return(pv)

// ...

int atomic_add_negative(i, pv) // *pv += i; return true if result is negative, performs memory barriers before and after

int atomic_xchg(pv, i) // returns previous value, performs memory barriers before and after

int atomic_cmpxchg(pv, old, new) // CAS, returns actual old value, performs memory barriers before and after

int atomic_add_unless(pv,

int a, int

mark)

// if (*pv != mark) { *pv += a; return true; } else { return false;

} and does barriers

void atomic_clear_mask(bitmask, pv)

void atomic_set_mask(bitmask, pv)

int _atomic_dec_and_lock(pv , spinlock_t *lock)

Atomically

decrement *pv and if it drops to zero, atomically acquire lock and

return true.

If does not drop to zero, just decrement, do not acquire lock and return false.

Atomic operations on 64-bit counters:

Most 32-bit architectures do not support 64-bit atomic operations, although x86_32 does.

atomic64_t

long

ATOMIT64_INIT(...)

atomic64_read(...)

etc.

Atomic operations on bits:

void set_bit(unsigned long nr, volatile unsigned long *addr) // no barriers implied

void clear_bit(unsigned long nr, volatile unsigned long *addr) // ...

void change_bit(unsigned long nr, volatile unsigned long *addr) // ... (flips the bit)

void smp_mb__before_clear_bit(void)

void smp_mb__after_clear_bit(void)

int test_bit(unsigned long nr, __const__ volatile unsigned long *addr);

int test_and_set_bit(unsigned long nr, volatile unsigned long *addr) // returns boolean, performs memory barriers

int test_and_clear_bit(unsigned long nr, volatile unsigned long *addr)

int test_and_change_bit(unsigned long nr, volatile unsigned long *addr)

int test_and_set_bit_lock(unsigned long nr, unsigned long *addr) // acquire-release semantics

void clear_bit_unlock(unsigned

long nr, unsigned long

*addr)

// ...

Non-atomic operations on bits:

void __set_bit(unsigned long nr, volatile unsigned long *addr)

void __clear_bit(unsigned long nr, volatile unsigned long *addr)

void __change_bit(unsigned long nr, volatile unsigned long *addr)

int __test_and_set_bit(unsigned long nr, volatile unsigned long *addr)

int __test_and_clear_bit(unsigned long nr, volatile unsigned long *addr)

int __test_and_change_bit(unsigned long nr, volatile unsigned long *addr)

void __clear_bit_unlock(unsigned long nr, unsigned long *addr) // implements release barrier

unsigned long find_first_bit(const unsigned long *addr, unsigned long size)

unsigned long find_first_zero_bit(const unsigned long *addr, unsigned long size)

unsigned long find_next_bit(const unsigned long *addr, unsigned long size, unsigned long offset)

if not found, returns value >= size.

int

ffs(int x)

int ffz(int x)

Memory barriers:

rmb, wmb, mb, read_barrier_depends – emit on UP and MP

smp_rmb, smp_wmb, smp_mb, smp_read_barrier_depends – emit MP ; on

UP emit only barrier()

barrier() – compiler barrier

p = ...

read_barrier_depends();

// a weaker form of read barrier, only affecting dependent data

a = *p;

[can drift down]

LOCK barrier

[cannot drift up]

[cannot drift down]

UNLOCK barrier

[can drift up]

Therefore UNLOCK + LOCK = full MB, but LOCK + UNLOCK is not (may drift into the middle and get reverse-ordered there).

smp_mb__before_spinlock() does not let stores before critical section to dirft inside it

set_current_state(TASK_UNINTERRUPTIBLE) does: smp_mb(),

current->state = TASK_UNINTERRUPTIBLE

wake_up does smp_mb

mmiowb() – order writes to IO space (PCI etc.)

Spinlocks:

Not recursive. Disables preemption on local CPU. Optionally disables hardware interrupts or bottom-half processing. While holding a spinlock, must not do anything that will cause sleep. Do not touch pageable (e.g. user) memory, kmalloc(GFP_KERNEL), any semaphore functions or any of the schedule functions, down_interruptible() and down(). Ok to call down_trylock() and up() as they do never sleep. Initialization: spinlock_t lock = SPIN_LOCK_UNLOCKED; // obsolete, incompatible with RT Or: DEFINE_SPINLOCK(name)

Or: void spin_lock_init(&lock);

Disable-restore interrupts on local CPU: unsigned long flags;spin_lock_irqsave(&lock, flags);spin_unlock_irqrestore(&lock, flags); Performs local_irq_save(); preempt_disable(). If knew for sure interrupts were enabled, can do instead: spin_lock_irq(&lock);spin_unlock_irq(&lock); Performs local_irq_disable(); preempt_disable(). If spinlock is never acquired from interrupt handle, can use the form that does not disable interrupts: spin_lock(&lock);spin_unlock(&lock); int spin_trylock(&lock); Performs preempt_disable(). Disable bottom half processing, but do not disable hardware interrupts: spin_lock_bh(&lock);spin_unlock_bh(&lock); int spin_trylock_bh(&lock); Performs local_bh_disable(); preempt_disable(). Nested forms for lockdep: spin_lock_nested(&lock, subclass)spin_lock_irqsave_nested(&lock, flags, subclass) spin_lock_nest_lock(&lock, nest_lock) // signal: ok to take lock despite already holding nest_lock of the same class

RW spinlocks:

Not recursive.Disables preemption on local CPU.Optionally disables hardware interrupts or bottom-half processing. No reader -> writer conversion.Readers are favored over writers and can starve them, in this case plain spinlock_t may be prefereable to rwlock_t. While holding a spinlock, must not do anything that will cause sleep. Do not touch pageable (e.g. user) memory, kmalloc(GFP_KERNEL), any semaphore functions or any of the schedule functions, down_interruptible() and down(). Ok to call down_trylock() and up() as they do never sleep. Initialization: rwlock_t lock = RW_LOCK_UNLOCKED; // obsolete, incompatible with RT Or: DEFINE_RWLOCK(lock)

Or: void rwlock_init(&lock);

Disable-restore interrupts on local CPU: unsigned long flags;read_lock_irqsave(&lock, flags);read_unlock_irqrestore(&lock, flags); write_lock_irqsave(&lock, flags);write_unlock_irqrestore(&lock, flags); Performs local_irq_save(); preempt_disable(). If knew for sure interrupts were enabled, can do instead: read_lock_irq(&lock);read_unlock_irq(&lock); write_lock_irq(&lock);write_unlock_irq(&lock); Performs local_irq_disable(); preempt_disable(). If spinlock is never acquired from interrupt handle, can use the form that does not disable interrupts: read_lock(&lock);read_unlock(&lock); write_lock(&lock);write_unlock(&lock); int read_trylock(&lock);int write_trylock(&lock); Performs preempt_disable(). Disable bottom half processing, but do not disable hardware interrupts: read_lock_bh(&lock);read_unlock_bh(&lock); write_lock_bh(&lock);write_unlock_bh(&lock); Performs local_bh_disable(); preempt_disable().

Local-global spinlock:

· Global data: very fast and scalable read locking, but very slow write locking.

· Per-CPU data: fast write locking on local CPU, possible write locking of other CPU’s data, very slow write locking of all CPUs data.

struct lglock lg;

DEFINE_STATIC_LGLOCK(lg);

void lg_lock_init(&lg, “mylg”);

void lg_local_lock(&lg);

void lg_local_unlock(&lg);

void lg_local_lock_cpu(&lg, int cpu);

void lg_local_unlock_cpu(&lg, int cpu);

void lg_global_lock(&lg);

void lg_global_unlock(&lg);

Mutexes:

Sleepable lock (may sleep when

acquiring, can sleep while holding it).

May not use in softirq or hardirq context.

Non-recursive.

Task may not exit with mutex held.

struct mutex mtx;

[static] DEFINE_MUTEX(mtx);

void mutex_init(&mtx);

void mutex_destroy(&mtx);

void mutex_lock(&mtx)

int mutex_trylock(&mtx)

void mutex_unlock(&mtx) //

must be unlocked by owner only

int mutex_is_locked(&mtx)

int mutex_lock_interruptible(&mtx) // 0 if acquired, otherwise -EINTR

int mutex_lock_killable(&mtx) // …

int atomic_dec_and_mutex_lock(atomic_t* cnt, &mtx)

If decrements cnt to 0, return true holding mutex.

Otherwise return false not holding mutex.

If locking more than one mutex of the same lock validation class, lockdep will complain. To lock multiple mutexes of the same class, designate their subclasses and lock with the following functions.

unsigned int subclass;

void mutex_lock_nested(&mtx, subclass)

void mutex_lock_interruptible_nested(&mtx, subclass)

void mutex_lock_killable_nested(&mtx,

subclass)

void mutex_lock_nest_lock(&mtx,

nest_lock) // signal: ok to take mtx despite already

holding nest_lock of the same class

Mutexes with priority inheritance:

struct rt_mutex rmx;

[static] DEFINE_RT_MUTEX(rmx);

void rt_mutex_init(&rmx)

void rt_mutex_destroy(&rmx)

void rt_mutex_lock(&rmx)

int rt_mutex_trylock(&rmx)

void rt_mutex_unlock(&rmx)

int rt_mutex_is_locked(&rmx)

int rt_mutex_timed_lock(&rmx, struct hrtimer_sleeper *timeout, int detect_deadlock)

success = 0, otherwise –EINTR or –ETIMEOUT or -EDEADLK

int rt_mutex_lock_interruptible(&rmx, int detect_deadlock)

success = 0, otherwise –EINTR or -EDEADLK

Semaphores:

Sleepable lock (may sleep when

acquiring, can sleep while holding it).

May not use in softirq or hardirq context.

struct semaphore sem;

[static] DEFINE_SEMAPHORE(sem);

void sema_init(&sem, int val) // val is maximum number of holders

void down(&sem) // acquire

int down_trylock(&sem) // inverted result: 0 if acquired, 1 if did not

int down_interruptible(&sem) // 0 or -EINTR

int down_killable(&sem) // 0 or -EINTR

int down_timeout(&sem, long jiffies) // 0 or -ETIME

void up(&sem) // release

RW locks (RW semaphores):

Any number of readers, up to 1 writer.

Non-recursive.

Sleepable lock (may sleep when acquiring, can sleep while holding it).

May not use in softirq or hardirq context.

There is no interrptible/killable wait, all wait is uninterruptible.

struct rw_semaphore rws;

[static] DECLARE_SEMAPHORE(rws);

void init_rwsem(&rws)

void down_read(&rws) // acquire

void down_write(&rws)

int down_read_trylock(&rws) // true = ok, false = failure

int down_write_trylock(&rws)

void up_read(&rws) // release

void up_write(&rws)

int rwsem_is_locked(&rws) // true if locked

void downgrade_write(&rws) // downgrade write lock to read lock

If locking more than one RW semaphore of the same lock validation class, lockdep will complain. To lock multiple mutexes of the same class, designate their subclasses and lock with the following functions.

void down_read_nested(&rws, int subclass)

void down_write_nested(&rws, int subclass)

void down_write_nest_lock(&rws, nest_lock) // signal: ok to take rws despite already holding nest_lock of the same class

Seqlocks:

Sequential locks.

· Good when lots of readers, few writers

· Writers take priority over readers

· So readers cannot starve writers

"Write" side (in write_seqlock_xxx)

internally uses spinlock and runs locked code with spinlock held.

"Read" side does not use spinlock.

"Write" side is subject to lock validator, "read" side is

not.

Internally increments a variable inside the lock. When it is odd, write lock is

held.

|

Writer usage |

Reader usage |

|

... write data ... write_sequnlock(&sq) |

start = read_seqbegin(&sq);

... read data ... |

seqlock_t sq;

[static] DEFINE_SEQLOCK(sq);

unsigned int start;

void seqlock_init(& sq)

void write_seqlock(&sq)

int write_tryseqlock(&sq) // true if locked

void write_sequnlock(&sq)

void write_seqlock_irqsave(&sq, flags)

void write_sequnlock_irqrestore(&sq, flags)

void write_seqlock_irq(&sq)

void write_sequnlock_irq(&sq)

void write_seqlock_bh(&sq)

void write_sequnlock_bh(&sq)

void write_seqcount_begin(seqcount_t

*s)

// caller uses his own mutex or other lock, built-in spinlock is not used

void write_seqcount_end(seqcount_t *s) // ...

void write_seqcount_barrier(seqcount_t *s) // invalidate in-progress read operations

start = read_seqbegin(const &sq)

int read_seqretry(const &sq, unsigned start)

start = read_seqbegin_irqsave(&sq, flags)

start = read_seqretry_irqrestore(&sq, iv, flags)

start = __read_seqcount_begin(const seqcount_t *s) // like read_seqbegin, but does not have smp_rmb at the end

start = read_seqcount_begin(const seqcount_t *s) // equivalent to read_seqbegin

start = raw_seqcount_begin(const seqcount_t *s) // get seqcount without waiting for it to go even, plus smp_rmb

int __read_seqcount_retry(const

seqcount_t *s, unsigned start) // like read_seqretry,

but does not issue smp_rmb

//

(caller must issue rmb before the call)

int read_seqcount_retry(const seqcount_t *s, unsigned start) // equivalent to read_seqretry

Lock validator (lockdep):

http://lwn.net/Articles/185666/

Documentation/lockdep-design.txt

Enable CONFIG_DEBUG_LOCK_ALLOC.

Every lock is assigned a class (= “key address”):

· For statically allocated lock it is the address of the lock

· For dynamic locks it is the spot of their init (spin_lock_init, mutex_init etc.) routine

Validator checks for:

· ClassA lock taken after ClassB if previously locks of these two classes were ever taken in inverse order

· ClassX lock is taken when ClassX lock is already held.

o

Exemption: use functions

like mutex_lock_nested and specify subclass.

Validator treats “class+subclass” as a separate class.

·

Locks released in the

order that is not reverse of their acquisition.

(A stack of currently-held locks is maintained, so any lock being released

should be at the top of the stack; anything else means that something strange

is going on.)

· Any (spin)lock ever acquired by hardirq can never be acquired when interrupts (hardirqs) are enabled.

o

Conversely: any lock ever

acquired with hardirqs enabled is “hardirq-unsafe”

and cannot be acquired in hardirq.

· Any (spin)lock ever acquired by softirq can never be acquired when softirqs are enabled.

o Conversely: any lock ever acquired with softirqs enabled is “softirq-unsafe” and cannot be acquired in hardirq.

· Cannot: (holding) hardirq-safe -> (acquire) hardirq-unsafe.

o

Since hardirq-unsafe

-> hardirq-safe is valid, so reverse is invalid, as it would cause lock

inversion.

May be too strong, but it is checked against nevertheless.

· Cannot: (holding) softirq-safe -> (acquire) softirq-unsafe.

Gotchas:

· Unloading of kernel modules causes class ids to be available for reuse and can produce false warning and leakage of class table (that can overflow and result in a message).

· Static initialization of large number of locks (e.g. array of structures with per-entry lock) can cause class table to overflow. Solution: initialize locks dynamically.

Aids:

· /proc/lockdep_stats

· /proc/lockdep

Kernel threads:

struct task_struct* task;

task =

kthread_create(thread_func, void* data, const char namefmt*, ...);

if (! IS_ERR(task)) wake_up_process(task);

Initially SCHED_NORMAL, nice 0.

Can alter with sched_setscheduler[_nocheck](…).

// create thread bound to NUMA memory node

task = kthread_create_on_node(thread_func, void* data, const char namefmt*,

cpu_to_node(cpu), ...);

if (! IS_ERR(task)) wake_up_process(task);

task = kthread_run(thread_func, void* data, const char namefmt*, ...);

int retval = kthread_stop(task);

Returns thread's retval or -EINTR if the process had never started.

int thread_func(void* data)

{

/* check if kthread_stop had been called */

if (kthread_should_stop())

return retval; /* return status to kthread_stop caller */

do_exit();

}

Priority ranges:

task_struct* p;

p->prio

All:

0 (highest) … MAX_PRIO-1 (lowest, 139)

MAX_PRIO = 140

Realtime:

0

(highest) … MAX_RT_PRIO-1 (lowest, 99)

MAX_PRIO = MAX_USER_RT_PRIO = 100

Timeshared:

MAX_RT_PRIO (100) … MAX_PRIO-1 (139)

Default priority for irq threads (PREEMPT_RT):

MAX_USER_RT_PRIO/2 (50)

CPUs:

NR_CPUS – maximum number in the system // eventually will be run-time variable nr_cpu_ids (nr_cpu_ids <= NR_CPUS)

unsigned long my_percpu[NR_CPUS];

int cpu = get_cpu(); // disable preemption and get current processor

my_percpu[cpu]++;

put_cpu(); // enable preemption

int smp_processor_id(); // unsafe if preemption is not disabled

Per-CPU data interface, static form usable only in kernel but not in loadable modules:

DECLARE_PER_CPU(vartype,

varname)

// declare per-CPU variable (not required locally, DEFINE_PER_CPU is ok)

DEFINE_PER_CPU(vartype, varname)

// define and allocate per-CPU variable

DECLARE_PER_CPU_SHARED_ALIGNED(...)

// cacheline aligned

DEFINE_PER_CPU_SHARED_ALIGNED(...)

DECLARE_PER_CPU_ALIGNED(...)

// cacheline aligned

DEFINE_PER_CPU_ ALIGNED(...)

DECLARE_PER_CPU_PAGE_ALIGNED(...)

// page-aligned

DEFINE_PER_CPU_ PAGE_ALIGNED(...)

EXPORT_PER_CPU_SYMBOL(varname)

// export for use by modules

EXPORT_PER_CPU_SYMBOL_GPL(varname)

get_cpu_var(varname)++; // disable preemption, access variable and increment it

put_cpu_var(varname); // reenable preemption

per_cpu(varname, cpu) = 0; // access per-cpu variable instance for particular CPU (does not disable

// preemption and provides by itself no locking)

Per-CPU data interface, dynamic form usable both in kernel and in loadable modules:

void* alloc_percpu(vartype); // on failure returns NULL

void* __alloc_percpu(size_t size, size_t align);

void free_percpu(void* data);

A* p = alloc_percpu(A); // same as below

A* p = alloc_percpu(sizeof(A), __alignof(__A)); // ...

Then can do get_cpu_var(p) and put_cpu_var(p).

this_cpu_read , this_cpu_write, this_cpu_add, this_cpu_sub etc. – operations performed with interrupts or preemption disabled

CPU sets:

typedef struct cpumask { DECLARE_BITMAP(bits, NR_CPUS);

} cpumask_t;

cpumask_t cpumask;

unsigned long* bp = coumask_bits(& cpumask);

const struct cpumask *const cpu_possible_mask; // populatable, e.g. can be hot-plugged, fixed at boot time

const struct cpumask *const cpu_present_mask; // populated

const struct cpumask *const cpu_online_mask; // available to scheduler

const struct cpumask *const cpu_active_mask; // available to migration (a subset of online, currently identical)

#define num_online_cpus() cpumask_weight(cpu_online_mask)

#define num_possible_cpus() cpumask_weight(cpu_possible_mask)

#define num_present_cpus() cpumask_weight(cpu_present_mask)

#define num_active_cpus() cpumask_weight(cpu_active_mask)

#define cpu_online(cpu) cpumask_test_cpu((cpu), cpu_online_mask)

#define cpu_possible(cpu) cpumask_test_cpu((cpu), cpu_possible_mask)

#define

cpu_present(cpu)

cpumask_test_cpu((cpu), cpu_present_mask)

#define

cpu_active(cpu) cpumask_test_cpu((cpu),

cpu_active_mask)

#define

cpu_is_offline(cpu)

unlikely(!cpu_online(cpu))

cpumask_t mask = { CPU_BITS_NONE };

mask_t mask = cpu_none_mask;

mask_t mask = cpu_all_mask;

cpumask_set_cpu(unsigned int cpu, & mask)

cpumask_clear_cpu(unsigned int cpu, & mask)

int cpumask_test_cpu(unsigned int cpu, & mask)

int cpumask_test_and_set_cpu(unsigned int cpu, & mask)

int cpumask_test_and_clear_cpu(unsigned int cpu, & mask)

cpumask_clear(&mask);

cpumask_setall(& mask);

cpumask_and(&mask,

&m1, &m2);

cpumask_or(&mask, &m1, &m2);

cpumask_xor(&mask, &m1, &m2);

cpumask_andnot(&mask, &m1, &m2);

cpumask_complement(&mask, &m1);

bool cpumask_[is]empty(& mask);

bool cpumask_[is]subset(& mask, & superset_mask);

#define cpu_isset(cpu, cpumask) test_bit((cpu), (cpumask).bits)

cpumask_copy(&dst,

&src);

bool cpumask_equal(&m1, &m2)

int cpumask_parse_user(const

char __user *buf, int len,

&mask)

// 0 = ok, otherwise -errno

int cpumask_parselist_user(const char __user *buf, int

len, &mask)

int cpulist_parse(const char *buf, & mask)

int cpumask_scnprintf(char

*buf, int len, &mask)

int cpulist_scnprintf(char *buf, int len,

&mask)

unsigned int cpumask_first(&mask)

// returns >= nr_cpu_ids if none

unsigned int cpumask_next(int n,

&mask)

// returns >= nr_cpu_ids if none

unsigned int cpumask_next_zero(int n,

&mask) // returns >= nr_cpu_ids if none

for_each_cpu(cpu, mask) {...}

// iterate over every cpu in a mask

for_each_cpu_not(cpu, mask)

{...}

// iterate over every cpu not in a mask

for_each_cpu_and(cpu, m1, m2)

{...}

// iterate over every cpu in both masks

#define for_each_possible_cpu(cpu) for_each_cpu((cpu), cpu_possible_mask)

#define for_each_online_cpu(cpu) for_each_cpu((cpu), cpu_online_mask)

#define for_each_present_cpu(cpu) for_each_cpu((cpu), cpu_present_mask)

cpumask_of_node(cpu_to_node(cpu))

Calling function on other CPUs:

void myfunc(void* info);

Handler function is invoked called on remote CPUs in

IPI interrupt context,

on local CPU – with interrupts disabled.

The following functions must be called with interrupts

enabled (or can deadlock).

They return 0 if successful, otherwise –errno (e.g. –ENXIO if CPU is not

online).

If wait is true, waits for completion of the call.

int smp_call_function_single(int

cpuid, myfunc, void *info, int wait);

int smp_call_function_any(&mask,

myfunc, void *info, int wait);

void smp_call_function_many(&mask, myfunc, void *info, bool wait);

Execute myfunc(info) on all CPUs in mask except current CPU.

Preemption must be disabled when calling smp_call_function_many.

May not call it from bottom-half or interrupt handler.

int smp_call_function(myfunc, void *info, int wait);

Call myfunc(info) on all CPUs in cpu_online_mask

except current CPU.

May not call smp_call_function from bottom-half or interrupt

handler.

Temporary disables preemption internally.

int on_each_cpu(myfunc, void *info, int wait);

Call myfunc(info) on all CPUs in cpu_online_mask

and current CPU.

May not call smp_call_function from bottom-half or interrupt

handler.

Temporary disables preemption internally.

void on_each_cpu_mask(&mask, myfunc, void *info, bool

wait);

Call myfunc(info) on all CPUs in the mask including

current CPU if it is in the mask.

May not call smp_call_function from bottom-half or interrupt

handler.

Preemption must be disabled when calling on_each_cpu_mask.

void on_each_cpu_cond(bool (*cond_func)(int cpu, void *info), myfunc, void *info, bool wait, gfp_t gfp_flags);

For each CPU in cpu_online_mask, invoke locally cond_func(cpu, info) and for those CPUs for which it returns true, perform call remotely myfunc(info) and optionally wait. Flags gpf_flags are used to allocate temporary CPU mask block.

May not call on_each_cpu_cond from bottom-half

or interrupt handler.

Temporary disables preemption internally.

Stop Machine:

int stop_machine(int (*fn)(void *), void *data, const struct cpumask *cpus)

Freeze the machine on all CPUs and execute fn(data).

This schedules a thread to be run on each CPU in the cpus mask (or each

online CPU if cpus is NULL) at the highest priority, which threads also

disable interrupts on their respective CPUs. The result is that no one is

holding a spinlock or inside any other preempt-disable region when fn

runs. This is effectively a very heavy lock equivalent to grabbing every

spinlock (and more).

fn(data) is executed only on one CPU, with interrupts disabled.

Memory zones:

|

ZONE_DMA |

pages that can be used for DMA |

x86-32: below 16 MB |

|

ZONE_DMA32 |

pages that can be used for DMA, |

|

|

ZONE_NORMAL |

normal pages, |

on x86-32: 16 MB to 896 MB |

|

ZONE_HIGHMEM |

pages not permanently mapped into kernel memory |

on x86-32: all memory above 896 MB |

|

ZONE_MOVABLE |

(related to hot-plugging) |

|

Normal allocations are usually satisfied from ZONE_NORMAL, but on shortage can

satisfy from ZONE_DMA.

x64 has only ZONE_DMA, ZONE_DMA32 and ZONE_NORMAL.

Memory allocation (kmaloc):

void* kmalloc(size_t

size, gfp_t flags)

kfree(p)

void* kmalloc_array(size_t n, size_t size, gfp_t flags)

void* kcalloc(size_t n, size_t size, gfp_t flags) // zeroes out

void* kzalloc(size_t size, gfp_t flags) // zeroes out

void* kmalloc_node(size_t size, gfp_t flags, int node) // from specific NUMA node

void* kzalloc_node(size_t size, gfp_t flags, int node) // zeroes out

Common flag combinations:

|

GFP_KERNEL |

Default, most usual. |

|

GFP_ATOMIC |

High-priority allocation. Does not

sleep. |

|

GFP_NOWAIT |

Like GFP_ATOMIC, but will not fallback to emergency pool. |

|

GFP_NOIO |

Like GFP_KERNEL, but will not do block

IO. |

|

GFP_NOFS |

Like GFP_KERNEL (can block and initiate

disk IO), but will not do file system IO. |

|

GFP_USER |

Normal allocation, can block. |

|

GFP_HIGHUSER |

Allocate from ZONE_HIGHMEM. Can block. |

|

GFP_HIGHUSER_MOVABLE |

|

|

GFP_TRANSHUGE |

|

|

GFP_DMA |

Allocate from ZONE_DMA. |

|

GFP_DMA32 |

Allocate from ZONE_DMA32 |

Their meanings:

|

GFP_KERNEL |

__GFP_WAIT | __GFP_IO | __GFP_FS |

|

GFP_ATOMIC |

__GFP_HIGH |

|

GFP_NOWAIT |

GFP_ATOMIC & ~__GFP_HIGH |

|

GFP_NOIO |

__GFP_WAIT |

|

GFP_NOFS |

__GFP_WAIT | __GFP_IO |

|

GFP_USER |

__GFP_WAIT | __GFP_IO | __GFP_FS | __GFP_HARDWALL |

|

GFP_HIGHUSER |

_GFP_WAIT | __GFP_IO | __GFP_FS | __GFP_HARDWALL | __GFP_HIGHMEM |

|

GFP_HIGHUSER_MOVABLE |

__GFP_WAIT | __GFP_IO | __GFP_FS | __GFP_HARDWALL | __GFP_HIGHMEM | __GFP_MOVABLE |

|

GFP_TRANSHUGE |

GFP_HIGHUSER_MOVABLE | __GFP_COMP | __GFP_NOMEMALLOC | __GFP_NORETRY | __GFP_NOWARN | __GFP_NO_KSWAPD |

|

GFP_DMA |

__GFP_DMA |

|

GFP_DMA32 |

__GFP_DMA32 |

|

GFP_TEMPORARY |

__GFP_WAIT | __GFP_IO | __GFP_FS | __GFP_RECLAIMABLE |

|

GFP_IOFS |

__GFP_IO | __GFP_FS |

Basic flags:

|

___GFP_DMA |

Allocate from ZONE_DMA |

|

___GFP_DMA32 |

Allocate from ZONE_DMA32 |

|

___GFP_HIGHMEM |

Allocate from ZONE_HIGHMEM |

|

___GFP_MOVABLE |

Allocate from ZONE_MOVABLE |

|

___GFP_WAIT |

Allocator can sleep |

|

___GFP_HIGH |

High-priority: allocator can access emergency pools |

|

___GFP_IO |

Allocator can start block IO |

|

___GFP_FS |

Allocator can start filesystem IO |

|

___GFP_COLD |

Request cold-cache pages instead of trying to return cache-warm pages |

|

___GFP_NOWARN |

Allocator does not print failure warnings |

|

___GFP_REPEAT |

Allocator repeats (once) the allocation if it fails, but the allocation can potentially fail |

|

___GFP_NOFAIL |

Allocator repeats allocation indefinitely, the allocation cannot fail. Deprecated, no new users allowed. |

|

___GFP_NORETRY |

Allocator never retries the allocation if it fails |

|

___GFP_MEMALLOC |

User reserves |

|

___GFP_COMP |

The allocator adds compound page metadata (used internally by hugetlb code) |

|

___GFP_ZERO |

Zero out page content |

|

___GFP_NOMEMALLOC |

Allocator does not fall back on reserves (takes precedence over MEMALLOC if both are specified) |

|

___GFP_HARDWALL |

The allocator enforces "hardwall" cpuset boundaries |

|

___GFP_THISNODE |

Allocate NUMA node-local memory ponly, no fallback |

|

___GFP_RECLAIMABLE |

The allocator marks the pages reclaimable |

|

___GFP_KMEMCG |

Allocation comes from a memcg-accounted resource |

|

___GFP_NOTRACK |

Don't track with kmemcheck |

|

___GFP_NO_KSWAPD |

|

|

___GFP_OTHER_NODE |

On behalf of other node |

|

___GFP_WRITE |

Allocator intends to dirty page |

Memory allocation (slab allocator):

cache (pool) -> set of slab blocks (each is several pages) -> objects in each slab

struct kmem_cache* kmem_cache_create(

"my_struct",

sizeof(my_struct),

size_t

align,

// required alignment,

often L1_CACHE_BYTES, or 0 if SLAB_HWCACHE_ALIGN

unsigned long flags, //

SLAB_xxx, see below

void (*ctor)(void*)) // can be NULL

void kmem_cache_destroy(struct kmem_cache* cache)

|

SLAB_DEBUG_FREE |

DEBUG: perform extensive check on free |

|

SLAB_RED_ZONE |

DEBUG: insert "red zones" around allocated memory to help detect buffer overruns |

|

SLAB_POISON |

DEBUG: fill a slab with known value (0xA5) to catch access to uninitialized memory |

|

SLAB_HWCACHE_ALIGN |

Align objects within a slab to a cache line |

|

SLAB_CACHE_DMA |

Allocate from ZONE_DMA |

|

SLAB_STORE_USER |

DEBUG: store the last owner for bug hunting |

|

SLAB_PANIC |

Panic if allocation fails |

|

SLAB_DESTROY_BY_RCU |

Defer freeing slabs to RCU (read more in header file) |

|

SLAB_MEM_SPREAD |

Spread some memory over cpuset |

|

SLAB_TRACE |

Trace allocations and frees |

|

SLAB_DEBUG_OBJECTS |

|

|

SLAB_NOLEAKTRACE |

Avoid kmemleak tracing |

|

SLAB_NOTRACK |

|

|

SLAB_FAILSLAB |

Fault injection mark |

|

SLAB_RECLAIM_ACCOUNT |

Objects are reclaimable |

|

SLAB_TEMPORARY |

Objects are short-lived |

void* kmem_cache_alloc(cache,

gfp_t flags);

void* kmem_cache_alloc_node(cache, gfp_t flags, int

node)

void kmem_cache_free(cache, void* p);

Memory allocation (virtually contiguous pages):

Allocated pages are virtually

contiguous and not necessarily physically contiguous.

Less performant than kalloc() since need to update page tables and flush

TLBs.

void* vmalloc(unsigned long size);

void* vzalloc(unsigned long size); // zeroed out

void vfree(const void* addr);

void* vmalloc_user(unsigned long size); // memory for user-space, zeroed out

void* vmalloc_node(unsigned long size, int node); // allocate pages in specific NUMA node

void* vzalloc_node(unsigned long size, int node);

void* vmalloc_exec(unsigned long size); // executable memory

void* vmalloc_32(unsigned long size); // 32-bit addressable memory

void* vmalloc_32_user(unsigned long size); // 32-bit addressable memory for user-space, zeroed-out

void* vmap(struct page **pages, unsigned int count, unsigned long flags, pgprot_t prot);

void vunmap(const void* addr); // release mapping

void vmalloc_sync_all(void);

Memory allocation (physical pages):

struct page* alloc_pages(gfp_t flags, unsigned int order)

Allocate (2 ^ order) contiguous

physical pages, return pointer to first pages's page structure.

On failure returns NULL.

unsigned long page_address(struct page* page) // get physical address

unsigned long __get_free_pages(gfp_t

gfp_flags, unsigned int order)

unsigned long __get_free_page(flags)

Same as alloc_pages, but return physical address or 0.

unsigned long get_zeroed_page(gfp_t flags)

Allocate one zeroed page, return physical address or 0.

#define __get_dma_pages(flags, order) __get_free_pages((flags) | GFP_DMA, (order))

void __free_pages(struct

page *page, unsigned int order)

void __free_page(struct page *page)

void free_pages(unsigned

long addr, unsigned int order)

void free_page(unsigned long addr)

void* alloc_pages_exact(size_t

size, gfp_t flags)

void free_pages_exact(void*physaddr, size_t

size)

Allocate contiguous physical pages to hold size bytes, return physical address or NULL on failure.

struct page*

alloc_pages_node(int nid, gfp_t flags, unsigned int order)

struct page* alloc_pages_exact_node(int nid, gfp_t flags,

unsigned int order)

void* alloc_pages_exact_nid(int nid, size_t

size, gfp_t flags)

Mapping physical pages into kernel space:

void* kmap(struct page* page)

If page is in low memory (ZONE_NORMAL, ZONE_DMA) its virtual address is simply returned.

If page is in ZONE_HIGHMEMORY, a mapping is created and

virtual address is returned.

May sleep.

void kunmap(struct page* page)

Unmap mapping created by kmap.

void* kmap_atomic(struct page* page)

void kunmap(struct page* page)

kmap_atomic/kunmap_atomic is significantly faster than kmap/kunmap because no global lock is needed and because the kmap code must perform a global TLB invalidation when the kmap pool wraps.

However when holding an atomic kmap it is not legal to sleep, so atomic kmaps are appropriate for short, tight code paths only.

struct page* kmap_to_page(void * kva)

PFN:

unsigned long pfn = page_to_pfn(page);

if (pfn_valid(pfn))

page = pfn_to_page(pfn);

void get_page(page);

....

void put_page(page);

void *kva = page_address(page)

get

kv-address for a page in lowmem zone

or for a

pages in himem zone mapped into kernel address space

otherwise

returns NULL

if

(virt_addr_valid(kva)) //

for kva in lowmem zone

page

= virt_to_page(kva);

Pin user pages

in memory:

note:

obsolete, should use get_user_pages_locked|unlocked|fast

struct page *pages;

down_read(¤t->mm->mmap_sem);

long nr = get_user_pages(current,

current->mm, (unsigned long) buf, npages, int write, int force, &pages,

NULL);

up_read(¤t->mm->mmap_sem);

.... modify ...

lock_page(page)

set_page_dirty(page)

unlock_page(page)

page_cache_release(page)

Map kernel

memory into userspace (e.g. for mmap):

down_read(¤t->mm->mmap_sem);

err = remap_pfn_range(struct

vm_area_struct *vma, unsigned long addr,

unsigned

long pfn, unsigned long size, pgprot_t prot)

err = remap_pfn_range(vma,

vma->vm_start, vma->vm_pgoff, vma->vm_end - vma->vm_start,

vma->vm_page_prot)

up_read(¤t->mm->mmap_sem);

Map kernel

memory to userspace:

static struct vm_operations_struct xxx_mmap_ops = {

.open = xxx_mm_open,

.close =

xxx_mm_close,

};

static void xxx_mm_open(struct vm_area_struct *vma)

{

struct file

*file = vma->vm_file;

struct

socket *sock = file->private_data;

struct sock

*sk = sock->sk;

if (sk)

atomic_inc(&pkt_sk(sk)->mapped);

}

static void xxx_mm_close(struct vm_area_struct *vma)

{

struct file

*file = vma->vm_file;

struct

socket *sock = file->private_data;

struct sock

*sk = sock->sk;

if (sk)

atomic_dec(&pkt_sk(sk)->mapped);

}

.......

if

(vma->vm_pgoff)

return

-EINVAL;

size

= vma->vm_end - vma->vm_start;

start

= vma->vm_start;

for

(i = 0; i < ....; i++) {

struct

page *page = virt_to_page(po->pg_vec[i]);

int

pg_num;

for

(pg_num = 0; pg_num < ....; pg_num++, page++) {

err

= vm_insert_page(vma, start, page);

if

(unlikely(err))

goto

out;

start

+= PAGE_SIZE;

}

}

vma->vm_ops

= &xxx_mmap_ops;

Memory

leak detection (kmemleak):

http://www.kernel.org/doc/Documentation/kmemleak.txt

Page allocations and ioremap are not tracked.

Only kmalloc, kmem_cache_alloc (slab allocations) and

vmalloc.

Build with

CONFIG_DEBUG_KMEMLEAK

To disable by default:

build with CONFIG_DEBUG_KMEMLEAK_DEFAULT_OFF

boot with kmemleak=on

mount -t

debugfs nodev /sys/kernel/debug

cat /sys/kernel/debug/kmemleak

Clear the list of all current possible memory leaks:

echo clear > /sys/kernel/debug/kmemleak

Trigger an intermediate memory scan:

echo scan > /sys/kernel/debug/kmemleak

cat /sys/kernel/debug/kmemleak

Disable default thread that scans every 10 minutes:

echo 'scan=off' >

/sys/kernel/debug/kmemleak

Dump the information about object at <addr>:

echo 'dump=<addr>' >

/sys/kernel/debug/kmemleak

Also

/proc/slabinfo

Time:

HZ tic ks (jiffies) per second, typically 100 or 1000, also can be NOHZ.

User-visible value is USER_HZ, so when communicating to userland scale like x

/ (HZ / USER_HZ).

Jiffies has unsigned long and 64-bit values (jiffies, jiffies_64).

unsigned long volatile jiffies;

// wraps around if BITS_PER_LONG < 64

u64

jiffies_64;

On x86 they are separate (but jiffies

can overlay lower word of jiffies_64).

On x64 they are the same thing.

jiffies may be read atomically.

jiffies_64 on 32-bit architectures (BITS_PER_LONG < 64) must be read

under seqlock_t jiffies_lock.

Can always use get_jiffies_64(), on 32-bit